Core Layers

Additive

class deep_qa.layers.additive.Additive(initializer='glorot_uniform', **kwargs)

Bases: deep_qa.layers.masked_layer.MaskedLayer

This Layer adds a parameter value to each cell in the input tensor, similar to a bias vector in a Dense layer, except that this layer only performs the addition, with one value per cell. The value to add is learned.

Parameters: initializer: str, optional (default='glorot_uniform')

Keras initializer for the additive weight.
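As a rough illustration of the forward pass, here is a plain-Python sketch for one batch of 2-D inputs. It assumes the learned weight has the shape of a single example (the input shape without the batch dimension), so each cell has its own value that is reused for every example in the batch. `additive_forward` is a hypothetical helper, not part of deep_qa, and the real layer works on Keras tensors rather than nested lists.

```python
from typing import List

Matrix = List[List[float]]


def additive_forward(batch: List[Matrix], weight: Matrix) -> List[Matrix]:
    """Add a per-cell weight to every example in the batch (hypothetical helper).

    Assumption: `weight` has the shape of one example, so each cell gets its
    own value and the same weight is reused for every example in the batch.
    """
    return [
        [[x + w for x, w in zip(row, w_row)] for row, w_row in zip(example, weight)]
        for example in batch
    ]


batch = [[[1.0, 2.0], [3.0, 4.0]], [[0.0, 0.0], [0.0, 0.0]]]
weight = [[0.5, -1.0], [2.0, 0.0]]  # illustrative values standing in for learned ones
print(additive_forward(batch, weight))
# [[[1.5, 1.0], [5.0, 4.0]], [[0.5, -1.0], [2.0, 0.0]]]
```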

build(input_shape)

Creates the layer weights.

Must be implemented on all layers that have weights.

Arguments:

- input_shape: Keras tensor (future input to layer) or list/tuple of Keras tensors to reference for weight shape computations.

get_config()

Returns the config of the layer.

A layer config is a Python dictionary (serializable) containing the configuration of a layer. The same layer can be reinstantiated later (without its trained weights) from this configuration.

The config of a layer does not include connectivity information, nor the layer class name. These are handled by Container (one layer of abstraction above).

Returns:

- Python dictionary.

BiGRUIndexSelector

class deep_qa.layers.bigru_index_selector.BiGRUIndexSelector(target_index, **kwargs)

Bases: deep_qa.layers.masked_layer.MaskedLayer

This Layer takes three inputs: a tensor of document indices, the seq2seq GRU output over the document read forwards, and the seq2seq GRU output over the document read backwards. It also takes one parameter: the word index whose biGRU outputs we want to extract.

- Inputs:
  - document indices: shape (batch_size, document_length)
  - forward GRU output: shape (batch_size, document_length, GRU hidden dim)
  - backward GRU output: shape (batch_size, document_length, GRU hidden dim)
- Output:
  - GRU outputs at index: shape (batch_size, GRU hidden dim * 2)

Parameters: target_index : int

The word index to extract the forward and backward GRU output from.
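For a single example (no batch dimension), the selection can be sketched as follows. This is a minimal sketch under stated assumptions: `target_index` is treated as a word index that is looked up in the document indices, only its first occurrence is used, and position p of the backward output is assumed to line up with token p of the document. `bigru_index_select` is a hypothetical name, not a deep_qa function.

```python
from typing import List

Vector = List[float]


def bigru_index_select(document: List[int], forward: List[Vector],
                       backward: List[Vector], target_index: int) -> Vector:
    """Concatenate the forward and backward GRU outputs at the target word.

    Assumptions: `target_index` is a word index matched against `document`,
    only its first occurrence is used, and `backward[p]` is aligned with
    token p. Raises ValueError if the word is not in the document.
    """
    position = document.index(target_index)
    # Output length is 2 * hidden_dim: forward features, then backward features.
    return forward[position] + backward[position]


document = [5, 7, 9]
forward = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
backward = [[10.0, 20.0], [30.0, 40.0], [50.0, 60.0]]
print(bigru_index_select(document, forward, backward, target_index=7))
# [3.0, 4.0, 30.0, 40.0]
```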

compute_mask(inputs, mask=None)

Computes an output mask tensor.

Arguments:

- inputs: Tensor or list of tensors.
- mask: Tensor or list of tensors.

Returns:

- None or a tensor (or list of tensors, one per output tensor of the layer).

compute_output_shape(input_shapes)

Computes the output shape of the layer.

Assumes that the layer will be built to match the input shape provided.

Arguments:

- input_shape: Shape tuple (tuple of integers) or list of shape tuples (one per input tensor of the layer). Shape tuples can include None for free dimensions, instead of an integer.

Returns:

- An output shape tuple.

get_config()

Returns the config of the layer.

A layer config is a Python dictionary (serializable) containing the configuration of a layer. The same layer can be reinstantiated later (without its trained weights) from this configuration.

The config of a layer does not include connectivity information, nor the layer class name. These are handled by Container (one layer of abstraction above).

Returns:

- Python dictionary.

ComplexConcat

class deep_qa.layers.complex_concat.ComplexConcat(combination: str, axis: int = -1, **kwargs)

Bases: deep_qa.layers.masked_layer.MaskedLayer

This Layer does K.concatenate() on a collection of tensors, but allows for more complex operations than Merge(mode='concat'). Specifically, you can perform an arbitrary number of elementwise combinations (sums, differences, products, or quotients) of the vectors, and concatenate all of the results. If you do not need to do this, you should use the regular Merge layer instead of this ComplexConcat.

Because the inputs all have the same shape, we assume that the masks are also the same, and just return the first mask.

- Input:

- A list of tensors. The tensors that you combine must have the same shape, so that we can do elementwise operations on them, and all tensors must have the same number of dimensions, and match on all dimensions except the concatenation axis.

- Output:

- A tensor with some combination of the input tensors concatenated along a specific dimension.

Parameters: axis : int, optional (default=-1)

The axis to use for K.concatenate.

combination: str

A comma-separated list of combinations to perform on the input tensors. These are either tensor indices (1-indexed), or an arithmetic operation between two tensor indices (valid operations: *, +, -, /). For example, these are all valid combination parameters: "1,2", "1,2*3", "1-2,2-1", "1,1*1", and "1,2,1*2".
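To make the combination syntax concrete, the sketch below evaluates a combination string on plain Python vectors (a single example, concatenating along the last axis). It illustrates the documented semantics under those simplifying assumptions and is not the layer's implementation; `complex_concat` and `_evaluate` are hypothetical helpers, and they do not validate malformed strings.

```python
import operator
from typing import List, Sequence

# Elementwise operations the combination string may use (per the docs: *, +, -, /).
_OPS = {"*": operator.mul, "+": operator.add, "-": operator.sub, "/": operator.truediv}


def _evaluate(term: str, tensors: Sequence[List[float]]) -> List[float]:
    """Evaluate one term such as "2" or "1*3"; tensor indices are 1-based."""
    for symbol, op in _OPS.items():
        if symbol in term:
            left, right = term.split(symbol)
            a, b = tensors[int(left) - 1], tensors[int(right) - 1]
            return [op(x, y) for x, y in zip(a, b)]
    return list(tensors[int(term) - 1])


def complex_concat(combination: str, tensors: Sequence[List[float]]) -> List[float]:
    """Evaluate each comma-separated term and concatenate the results."""
    result: List[float] = []
    for term in combination.split(","):
        result.extend(_evaluate(term.strip(), tensors))
    return result


tensors = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
print(complex_concat("1,2*3", tensors))  # [1.0, 2.0, 15.0, 24.0]
```

With three input vectors, "1,2*3" yields the first vector followed by the elementwise product of the second and third, so the output is twice as long as each input.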

compute_mask(inputs, mask=None)

Computes an output mask tensor.

Arguments:

- inputs: Tensor or list of tensors.
- mask: Tensor or list of tensors.

Returns:

- None or a tensor (or list of tensors, one per output tensor of the layer).

compute_output_shape(input_shape)

Computes the output shape of the layer.

Assumes that the layer will be built to match the input shape provided.

Arguments:

- input_shape: Shape tuple (tuple of integers) or list of shape tuples (one per input tensor of the layer). Shape tuples can include None for free dimensions, instead of an integer.

Returns:

- An output shape tuple.

get_config()

Returns the config of the layer.

A layer config is a Python dictionary (serializable) containing the configuration of a layer. The same layer can be reinstantiated later (without its trained weights) from this configuration.

The config of a layer does not include connectivity information, nor the layer class name. These are handled by Container (one layer of abstraction above).

Returns:

- Python dictionary.

Highway

L1Normalize

class deep_qa.layers.l1_normalize.L1Normalize(**kwargs)

Bases: deep_qa.layers.masked_layer.MaskedLayer

This Layer normalizes a tensor by its L1 norm. This could just be a Lambda layer that calls our tensors.l1_normalize function, except that Lambda layers do not properly handle masked input.

The expected input to this layer is a tensor of shape (batch_size, x), with an optional mask of the same shape. We also accept as input a tensor of shape (batch_size, x, 1), which will be squeezed to shape (batch_size, x) (though the mask must still be of shape (batch_size, x)).

We give no output mask, as we expect this to only be used at the end of the model, to get a final probability distribution over class labels. If you need this to propagate the mask for your model, it would be pretty easy to change it to optionally do so; submit a PR.
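For a single example of shape (x,), masked L1 normalization can be sketched as follows. This is a minimal sketch: masked entries are zeroed before dividing by the L1 norm of the remaining values, and the uniform fallback for an all-zero input is an assumption of this sketch rather than documented behavior. `masked_l1_normalize` is a hypothetical name.

```python
from typing import List, Optional


def masked_l1_normalize(values: List[float],
                        mask: Optional[List[int]] = None) -> List[float]:
    """Divide unmasked values by their L1 norm; masked positions become 0.0."""
    if mask is None:
        mask = [1] * len(values)
    masked = [v if m else 0.0 for v, m in zip(values, mask)]
    total = sum(abs(v) for v in masked)
    if total == 0.0:
        # Assumption: fall back to a uniform distribution over unmasked positions.
        count = sum(1 for m in mask if m)
        if count == 0:
            return [0.0] * len(values)
        return [1.0 / count if m else 0.0 for m in mask]
    return [v / total for v in masked]


print(masked_l1_normalize([1.0, 3.0, 100.0], mask=[1, 1, 0]))
# [0.25, 0.75, 0.0]
```

The masked value (100.0) neither appears in the output nor inflates the norm, which is why a plain Lambda over the unmasked tensor would give a different distribution.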

compute_mask(inputs, mask=None)

Computes an output mask tensor.

Arguments:

- inputs: Tensor or list of tensors.
- mask: Tensor or list of tensors.

Returns:

- None or a tensor (or list of tensors, one per output tensor of the layer).

compute_output_shape(input_shape)

Computes the output shape of the layer.

Assumes that the layer will be built to match the input shape provided.

Arguments:

- input_shape: Shape tuple (tuple of integers) or list of shape tuples (one per input tensor of the layer). Shape tuples can include None for free dimensions, instead of an integer.

Returns:

- An output shape tuple.

NoisyOr

class deep_qa.layers.noisy_or.BetweenZeroAndOne

Bases: keras.constraints.Constraint

Constrains the weights to be between zero and one.

-

class

deep_qa.layers.noisy_or.NoisyOr(axis=-1, name='noisy_or', param_init='uniform', noise_param_constraint=None, **kwargs)[source]¶ Bases:

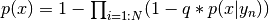

deep_qa.layers.masked_layer.MaskedLayerThis layer takes as input a tensor of probabilities and calculates the noisy-or probability across a given axis based on the noisy-or equation:

where :math`q` is the noise parameter.

- Inputs:
  - probabilities: shape (batch, ..., N, ...). Optionally takes a mask of the same shape. N is the number of y's in the above equation (i.e. the number of probabilities being combined in the product), in the dimension corresponding to the specified axis.
- Output:
  - X: shape (batch, ..., ...). The output has one less dimension than the input, and has an optional mask of the same shape. The lost dimension corresponds to the specified axis. The output mask is the result of K.any() on the input mask, along the specified axis.

Parameters: axis : int, default=-1

The axis over which to combine probabilities.

name : string, default='noisy_or'

Name of the layer, used to debug both the layer and its parameter.

param_init : string, default='uniform'

The initialization of the noise parameter.

noise_param_constraint : Keras Constraint, default=None

Optional constraint to apply to the noise parameter.
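The noisy-or equation can be sketched in plain Python for a single slice along the combining axis. In this sketch the noise parameter q is a fixed float, whereas the layer learns it; zeroing masked probabilities (so each contributes a factor of 1 to the product) is an assumption about how the mask is applied. `noisy_or` is a hypothetical helper name.

```python
from typing import List, Optional


def noisy_or(probabilities: List[float], q: float,
             mask: Optional[List[int]] = None) -> float:
    """Compute 1 - prod_i (1 - q * p(y_i)) over one slice of probabilities."""
    if mask is None:
        mask = [1] * len(probabilities)
    product = 1.0
    for p, m in zip(probabilities, mask):
        # Assumption: a masked probability is treated as 0, giving a factor of 1.
        product *= 1.0 - q * (p if m else 0.0)
    return 1.0 - product


print(noisy_or([0.5, 0.5], q=1.0))  # 0.75
```

With q = 1 this is the standard noisy-or. If both q and every p(y_i) lie in [0, 1], each factor 1 - q p(y_i) also lies in [0, 1], so the result stays a valid probability; a BetweenZeroAndOne constraint on q would preserve that property.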

OptionAttentionSum

class deep_qa.layers.option_attention_sum.OptionAttentionSum(multiword_option_mode='mean', **kwargs)

Bases: deep_qa.layers.masked_layer.MaskedLayer

This Layer takes three inputs: a tensor of document indices, a tensor of document probabilities, and a tensor of answer options. In addition, it takes a parameter: a string describing how to calculate the probability of options that consist of multiple words. We compute the probability of each of the answer options in the fashion described in the paper “Text Understanding with the Attention Sum Reader Network” (Kadlec et al., 2016).

- Inputs:
  - document indices: shape (batch_size, document_length)
  - document probabilities: shape (batch_size, document_length)
  - options: shape (batch_size, num_options, option_length)
- Output:
  - option_probabilities: shape (batch_size, num_options)
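For one example, the Attention Sum computation can be sketched as follows: a word's probability is the sum of the document probabilities at every position where that word occurs, and a multi-word option combines the probabilities of its words. This is a minimal sketch; the "mean" and "sum" modes and treating word index 0 as option padding are assumptions rather than confirmed deep_qa behavior, and `option_attention_sum` is a hypothetical helper.

```python
from typing import Dict, List


def option_attention_sum(document: List[int], doc_probs: List[float],
                         options: List[List[int]], mode: str = "mean") -> List[float]:
    """Score each option by the attention mass on its words (one example)."""
    # Attention Sum: total probability on every document position holding the word.
    word_prob: Dict[int, float] = {}
    for word, prob in zip(document, doc_probs):
        word_prob[word] = word_prob.get(word, 0.0) + prob

    scores = []
    for option in options:
        words = [w for w in option if w != 0]  # assumption: 0 is option padding
        per_word = [word_prob.get(w, 0.0) for w in words]
        if not per_word:
            scores.append(0.0)
        elif mode == "mean":
            scores.append(sum(per_word) / len(per_word))
        elif mode == "sum":
            scores.append(sum(per_word))
        else:
            raise ValueError(f"unknown multiword option mode: {mode!r}")
    return scores


document = [4, 7, 4, 9]
doc_probs = [0.25, 0.25, 0.25, 0.25]
options = [[4, 0], [7, 9]]
print(option_attention_sum(document, doc_probs, options))  # [0.5, 0.25]
```

Word 4 appears twice, so the first option collects both of its attention weights; this pooling over repeated occurrences is the core idea of the Attention Sum Reader.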

compute_mask(inputs, mask=None)

Computes an output mask tensor.

Arguments:

- inputs: Tensor or list of tensors.
- mask: Tensor or list of tensors.

Returns:

- None or a tensor (or list of tensors, one per output tensor of the layer).

compute_output_shape(input_shapes)

Computes the output shape of the layer.

Assumes that the layer will be built to match the input shape provided.

Arguments:

- input_shape: Shape tuple (tuple of integers) or list of shape tuples (one per input tensor of the layer). Shape tuples can include None for free dimensions, instead of an integer.

Returns:

- An output shape tuple.

get_config()

Returns the config of the layer.

A layer config is a Python dictionary (serializable) containing the configuration of a layer. The same layer can be reinstantiated later (without its trained weights) from this configuration.

The config of a layer does not include connectivity information, nor the layer class name. These are handled by Container (one layer of abstraction above).

Returns:

- Python dictionary.

Overlap

class deep_qa.layers.overlap.Overlap(**kwargs)

Bases: deep_qa.layers.masked_layer.MaskedLayer

This Layer takes 2 inputs: a tensor_a (e.g. a document) and a tensor_b (e.g. a question). For each index in tensor_a, it returns a one-hot vector suitable for feature representation, indicating whether the element at that index in tensor_a appears in tensor_b. Note that the output is therefore not the same shape as tensor_a: it has an extra final dimension of size 2.

- Inputs:
  - tensor_a: shape (batch_size, length_a)
  - tensor_b: shape (batch_size, length_b)
- Output:
  - Collection of one-hot vectors indicating overlap: shape (batch_size, length_a, 2)

Notes

This layer is used to implement the “Question Evidence Common Word Feature” discussed in section 3.2.4 of Dhingra et al., 2016.
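For a single example, the overlap feature can be sketched as below. The encoding is an assumption of this sketch: [0.0, 1.0] marks a token of tensor_a that appears in tensor_b and [1.0, 0.0] marks one that does not, and masked entries of tensor_b are ignored. `overlap_feature` is a hypothetical helper, not the layer's API.

```python
from typing import List, Optional


def overlap_feature(tensor_a: List[int], tensor_b: List[int],
                    mask_b: Optional[List[int]] = None) -> List[List[float]]:
    """Return one 2-way one-hot vector per element of `tensor_a`."""
    if mask_b is None:
        mask_b = [1] * len(tensor_b)
    # Only unmasked elements of tensor_b count as "present".
    present = {b for b, m in zip(tensor_b, mask_b) if m}
    # Assumed encoding: index 1 means "appears in tensor_b", index 0 means it does not.
    return [[0.0, 1.0] if a in present else [1.0, 0.0] for a in tensor_a]


document = [3, 5, 7]
question = [5, 9]
print(overlap_feature(document, question))
# [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
```

The result has shape (length_a, 2) for one example, matching the (batch_size, length_a, 2) output described above once a batch dimension is added.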

compute_output_shape(input_shapes)

Computes the output shape of the layer.

Assumes that the layer will be built to match the input shape provided.

Arguments:

- input_shape: Shape tuple (tuple of integers) or list of shape tuples (one per input tensor of the layer). Shape tuples can include None for free dimensions, instead of an integer.

Returns:

- An output shape tuple.

SubtractMinimum

class deep_qa.layers.subtract_minimum.SubtractMinimum(axis: int, **kwargs)

Bases: deep_qa.layers.masked_layer.MaskedLayer

This layer is used to normalize across a tensor axis. Normalization is done by finding the minimum value across the specified axis, and then subtracting that value from all values (again, across the specified axis). Note that this also works just fine if you want to find the minimum across more than one axis.

- Inputs:

- A tensor with arbitrary dimension, and a mask of the same shape (currently doesn’t support masks with other shapes).

- Output:

- The same tensor, with the minimum across one (or more) of the dimensions subtracted.

Parameters: axis: int, list of int, or None

The axis (or axes) across which to find the minimum. Can be a single int, a list of ints, or None. We just call K.min with this parameter, so anything that’s valid there works here too.
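A single-axis version of this normalization, for one 1-D slice, can be sketched as follows. Ignoring masked positions when looking for the minimum is an assumption of this sketch, as is leaving masked values in the output (shifted like the rest); `subtract_minimum` is a hypothetical helper rather than deep_qa code.

```python
from typing import List, Optional


def subtract_minimum(values: List[float],
                     mask: Optional[List[int]] = None) -> List[float]:
    """Subtract the minimum over the unmasked values from every value.

    Requires at least one unmasked value (min() of an empty sequence raises).
    """
    if mask is None:
        mask = [1] * len(values)
    # Assumption: masked positions are ignored when looking for the minimum.
    minimum = min(v for v, m in zip(values, mask) if m)
    return [v - minimum for v in values]


print(subtract_minimum([3.0, 5.0, 4.0]))  # [0.0, 2.0, 1.0]
```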

compute_mask(inputs, mask=None)

Computes an output mask tensor.

Arguments:

- inputs: Tensor or list of tensors.
- mask: Tensor or list of tensors.

Returns:

- None or a tensor (or list of tensors, one per output tensor of the layer).

compute_output_shape(input_shape)

Computes the output shape of the layer.

Assumes that the layer will be built to match the input shape provided.

Arguments:

- input_shape: Shape tuple (tuple of integers) or list of shape tuples (one per input tensor of the layer). Shape tuples can include None for free dimensions, instead of an integer.

Returns:

- An output shape tuple.

get_config()

Returns the config of the layer.

A layer config is a Python dictionary (serializable) containing the configuration of a layer. The same layer can be reinstantiated later (without its trained weights) from this configuration.

The config of a layer does not include connectivity information, nor the layer class name. These are handled by Container (one layer of abstraction above).

Returns:

- Python dictionary.

VectorMatrixMerge

class deep_qa.layers.vector_matrix_merge.VectorMatrixMerge(concat_axis: int, mask_concat_axis: int = None, propagate_mask: bool = True, **kwargs)

Bases: deep_qa.layers.masked_layer.MaskedLayer

This Layer takes a tensor with K modes and a collection of other tensors with K - 1 modes, and concatenates the lower-order tensors at the beginning of the higher-order tensor along a given mode. We call this a vector-matrix merge to evoke the notion of appending vectors onto a matrix, but this will also work with higher-order tensors.

For example, if you have a memory tensor of shape (batch_size, knowledge_length, encoding_dim), containing knowledge_length encoded sentences, you could use this layer to concatenate N individual encoded sentences with it, resulting in a tensor of shape (batch_size, N + knowledge_length, encoding_dim).

This layer supports masking: we will pass through whatever mask you have on the matrix, and concatenate ones to it, similar to how we concatenate the inputs. We need to know what axis to do that concatenation on, though. We default to the input concatenation axis, but you can specify a different one if you need to. We just ignore masks on the vectors, because doing the right thing with masked vectors here is complicated. If you want to handle that later, submit a PR.

This Layer is essentially the opposite of a VectorMatrixSplit.

Parameters: concat_axis: int

The axis to concatenate the vectors and matrix on.

mask_concat_axis: int, optional (default=None)

The axis to concatenate the masks on (defaults to concat_axis if None).
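For one example, with the concatenation axis pointing at the rows of the matrix, the merge and its mask handling can be sketched as below. This is a minimal sketch with a hypothetical helper name; it assumes the vectors are prepended in the order given and, as described above, it ignores any masks on the vectors and gives each prepended vector a mask value of 1.

```python
from typing import List, Optional, Tuple

Row = List[float]


def vector_matrix_merge(vectors: List[Row], matrix: List[Row],
                        matrix_mask: Optional[List[int]] = None
                        ) -> Tuple[List[Row], List[int]]:
    """Prepend `vectors` to `matrix` along the row axis, for one example."""
    if matrix_mask is None:
        matrix_mask = [1] * len(matrix)
    merged = [list(v) for v in vectors] + [list(r) for r in matrix]
    # Vector masks are ignored: each prepended vector gets a mask value of 1.
    merged_mask = [1] * len(vectors) + list(matrix_mask)
    return merged, merged_mask


memory = [[1.0, 2.0], [3.0, 4.0]]   # knowledge_length=2, encoding_dim=2
sentence = [[9.0, 9.0]]             # N=1 encoded sentence
print(vector_matrix_merge(sentence, memory, matrix_mask=[1, 0]))
# ([[9.0, 9.0], [1.0, 2.0], [3.0, 4.0]], [1, 1, 0])
```

The merged example has N + knowledge_length rows, matching the (batch_size, N + knowledge_length, encoding_dim) shape described above.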

compute_mask(inputs, mask=None)

Computes an output mask tensor.

Arguments:

- inputs: Tensor or list of tensors.
- mask: Tensor or list of tensors.

Returns:

- None or a tensor (or list of tensors, one per output tensor of the layer).

compute_output_shape(input_shapes)

Computes the output shape of the layer.

Assumes that the layer will be built to match the input shape provided.

Arguments:

- input_shape: Shape tuple (tuple of integers) or list of shape tuples (one per input tensor of the layer). Shape tuples can include None for free dimensions, instead of an integer.

Returns:

- An output shape tuple.

get_config()

Returns the config of the layer.

A layer config is a Python dictionary (serializable) containing the configuration of a layer. The same layer can be reinstantiated later (without its trained weights) from this configuration.

The config of a layer does not include connectivity information, nor the layer class name. These are handled by Container (one layer of abstraction above).

Returns:

- Python dictionary.

VectorMatrixSplit

class deep_qa.layers.vector_matrix_split.VectorMatrixSplit(split_axis: int, mask_split_axis: int = None, propagate_mask: bool = True, **kwargs)

Bases: deep_qa.layers.masked_layer.MaskedLayer

This Layer takes a tensor with K modes and splits it into a tensor with K - 1 modes and a tensor with K modes, but one fewer row in one of the dimensions. We call this a vector-matrix split to evoke the notion of taking a row- (or column-) vector off of a matrix and returning both the vector and the remaining matrix, but this will also work with higher-order tensors.

For example, if you have a sentence that has a combined (word + characters) representation of the tokens in the sentence, you’d have a tensor of shape (batch_size, sentence_length, word_length + 1). You could split that using this Layer into a tensor of shape (batch_size, sentence_length) for the word tokens in the sentence, and a tensor of shape (batch_size, sentence_length, word_length) for the characters of each word token.

This layer supports masking - we will split the mask the same way that we split the inputs.

This Layer is essentially the opposite of a VectorMatrixMerge.
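Following the word + characters example above, the split for one sentence with split_axis=-1 can be sketched as below. The sketch assumes that the first slice along the split axis becomes the vector and the remaining slices form the matrix; a mask would be split the same way. `vector_matrix_split` is a hypothetical helper, not the layer's API.

```python
from typing import List, Tuple


def vector_matrix_split(tensor: List[List[int]]) -> Tuple[List[int], List[List[int]]]:
    """Split the first element off the last axis of a (sentence_length, word_length + 1) list."""
    vector = [row[0] for row in tensor]   # shape: (sentence_length,)
    matrix = [row[1:] for row in tensor]  # shape: (sentence_length, word_length)
    return vector, matrix


# Each row: [word index, character indices...] (illustrative values).
sentence = [[10, 1, 2], [11, 3, 4]]
words, characters = vector_matrix_split(sentence)
print(words)       # [10, 11]
print(characters)  # [[1, 2], [3, 4]]
```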

compute_mask(inputs, input_mask=None)

Computes an output mask tensor.

Arguments:

- inputs: Tensor or list of tensors.
- input_mask: Tensor or list of tensors.

Returns:

- None or a tensor (or list of tensors, one per output tensor of the layer).

compute_output_shape(input_shape)

Computes the output shape of the layer.

Assumes that the layer will be built to match the input shape provided.

Arguments:

- input_shape: Shape tuple (tuple of integers) or list of shape tuples (one per input tensor of the layer). Shape tuples can include None for free dimensions, instead of an integer.

Returns:

- An output shape tuple.

get_config()

Returns the config of the layer.

A layer config is a Python dictionary (serializable) containing the configuration of a layer. The same layer can be reinstantiated later (without its trained weights) from this configuration.

The config of a layer does not include connectivity information, nor the layer class name. These are handled by Container (one layer of abstraction above).

Returns:

- Python dictionary.
